Do you get confused when you hear about 'magnitudes'? That's easy to do, so here is a quick explanation that may help.

Those Pesky Magnitudes …

The system of designating stellar brightness by means of magnitude, in which brighter stars have numerically lower values, can seem arbitrary and counterintuitive to astronomy newcomers. However, understanding its origin may help to make it seem more logical.

The system dates to the ancient Greeks and Hipparchus, who designated the brighter stars as stars of the “first magnitude”, meaning, one supposes, as first in prominence or importance. Slightly dimmer stars were considered stars of the “second magnitude”, as of secondary importance. The system continued in this manner down to the dimmest stars the Greeks could see, which were relegated to “sixth magnitude”. This crude classification was the genesis of the system that, with refinements, has come down to us.

With the invention of the telescope and the beginning of celestial cataloging, the system was extended to include stars dimmer than the eye can see. It was also extended upward to bring the Sun, Moon, and planets into the system; since these objects were brighter than “stars of the first magnitude”, they were assigned zero and negative numbers. When accurate measurements became possible, the system was formalized in a way that reflected what was thought to be the logarithmic response of human vision to light intensity; a difference of 5 magnitudes was defined to represent a factor of 100 in brightness. Mathematically, then, a difference of one magnitude represents a brightness ratio equal to the fifth root of 100, or about 2.512.
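To make the arithmetic concrete, here is a minimal Python sketch of the relationship just described. The function names are illustrative only and do not come from any particular astronomy library.

```python
import math


def brightness_ratio(mag_difference: float) -> float:
    """Brightness factor corresponding to a difference in magnitudes.

    Five magnitudes are defined as a factor of exactly 100,
    so one magnitude is 100 ** (1/5), roughly 2.512.
    """
    return 100 ** (mag_difference / 5)


def magnitude_difference(ratio: float) -> float:
    """Inverse relation: magnitude difference for a given brightness factor."""
    return 2.5 * math.log10(ratio)


if __name__ == "__main__":
    print(f"1 magnitude  -> factor of {brightness_ratio(1):.3f}")
    print(f"5 magnitudes -> factor of {brightness_ratio(5):.0f}")
    print(f"factor of 100 -> {magnitude_difference(100):.1f} magnitudes")
```

So a first-magnitude star is about 2.5 times brighter than a second-magnitude star, and 100 times brighter than a sixth-magnitude star.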

Another qualification had to be added to the system with the advent of photographic film, which was more sensitive to blue light than the human eye. More recently, the advent of sensors that can pick up light in the infrared and ultraviolet range has complicated the situation even further. Therefore, we need to specify that the magnitudes we most often talk about in amateur astronomy are visual magnitudes – that is, intensities measured in wavelengths to which the human eye is most sensitive.

It should also be clarified that the magnitudes we are discussing are “apparent magnitudes”, or magnitudes of sources as they appear at their actual distances from us on Earth. Of course, an object of a given magnitude can be intrinsically bright but far away or inherently dim but nearby. This leads to the notion of “absolute magnitudes”, that is, the measurement of magnitude under normalized conditions; the absolute magnitude of a star is its magnitude as it would appear at a standard distance of 10 parsecs, or about 32 light-years. The subject of absolute magnitude, however, will have to be a topic for a future discussion.
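As a brief preview, the conversion between the two kinds of magnitude follows from the inverse-square law: moving a source ten times farther away dims it by a factor of 100, or 5 magnitudes. The sketch below assumes the distance is known in parsecs and ignores any dimming by interstellar dust; the function name and the sample star are hypothetical.

```python
import math

STANDARD_DISTANCE_PC = 10.0  # standard distance used to define absolute magnitude


def absolute_magnitude(apparent_mag: float, distance_pc: float) -> float:
    """Magnitude the source would have if moved to 10 parsecs.

    Assumes no absorption of light along the line of sight.
    """
    return apparent_mag - 5 * math.log10(distance_pc / STANDARD_DISTANCE_PC)


if __name__ == "__main__":
    # Hypothetical star: apparent magnitude 10.0 at a distance of 100 parsecs.
    # It is 10 times farther than the standard distance, so it would appear
    # 5 magnitudes brighter at 10 parsecs.
    print(absolute_magnitude(10.0, 100.0))
```

A star that already lies at 10 parsecs has the same apparent and absolute magnitude.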

In common usage, the magnitude is assumed to be positive (i.e. dimmer than magnitude 0.0) unless otherwise specified; in cases where there is a possibility of confusion, a “+” or “-” sign can be stated explicitly.

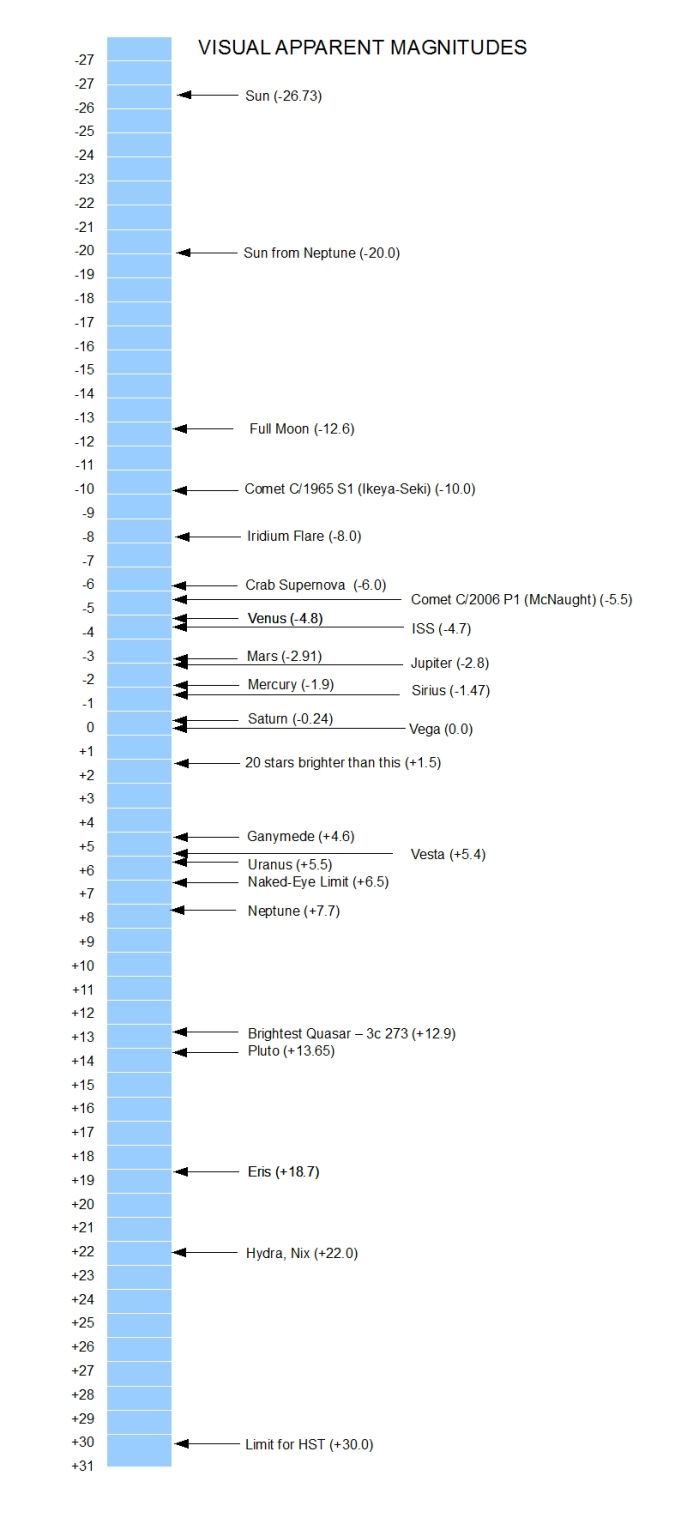

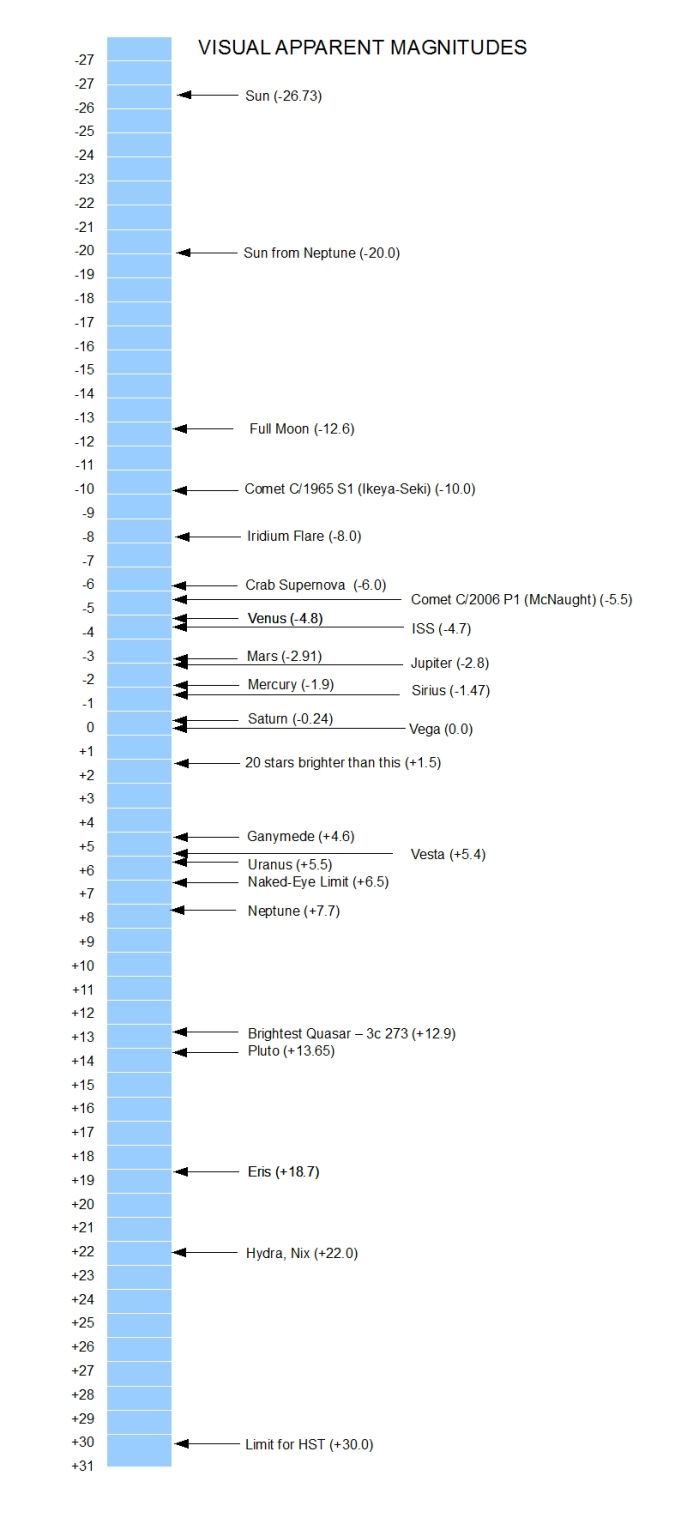

With that in mind, you can consult the accompanying graph, which marks the magnitude at peak brightness of some typical objects of interest to astronomers.

The Sun, of course, is by far the brightest object in our neighborhood; even at the distance of Neptune, it would still shine at about magnitude -19. Note that some objects we do not usually consider to be visible to the unaided eye – such as Uranus, Ganymede, and Vesta – are in fact within the range of naked-eye vision under ideal circumstances.
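The figure for the Sun seen from Neptune can be checked with the inverse-square law. This rough sketch assumes an apparent magnitude of about -26.74 for the Sun as seen from Earth (1 AU) and an average distance of about 30.1 AU for Neptune; both values are approximations supplied here for illustration, and the function name is hypothetical.

```python
import math

SUN_MAG_AT_1_AU = -26.74    # assumed apparent magnitude of the Sun from Earth
NEPTUNE_DISTANCE_AU = 30.1  # assumed average Sun-Neptune distance


def magnitude_at_distance(mag_at_ref: float, ref_distance: float,
                          new_distance: float) -> float:
    """Apparent magnitude of the same source seen from a different distance.

    Brightness falls off as 1 / distance**2, which corresponds to a change
    of 5 * log10(new_distance / ref_distance) magnitudes.
    """
    return mag_at_ref + 5 * math.log10(new_distance / ref_distance)


if __name__ == "__main__":
    sun_from_neptune = magnitude_at_distance(
        SUN_MAG_AT_1_AU, 1.0, NEPTUNE_DISTANCE_AU
    )
    print(f"Sun seen from Neptune: magnitude {sun_from_neptune:.1f}")
```

Under these assumptions the result comes out near magnitude -19.3, in the same ballpark as the figure quoted above.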

- John Sheff
